The world of AI model training continues to evolve. By the way, a big shout-out to Meta this week on the release of Llama3. While I haven't had a chance to play with it myself, there have been some very impressive reports about its abilities, and from what we hear, its most impressive aspects have yet to be released.

Many of our customers are spending as much time on inference and AI model serving as they are on training. After all, the only way to unlock the value created by training or fine-tuning a model is to start using it to transform business processes.

This is why VAST is very excited to announce our partnership with Vapor IO, which has decided to deploy the VAST Data Platform as part of its Zero Gap AI offering.

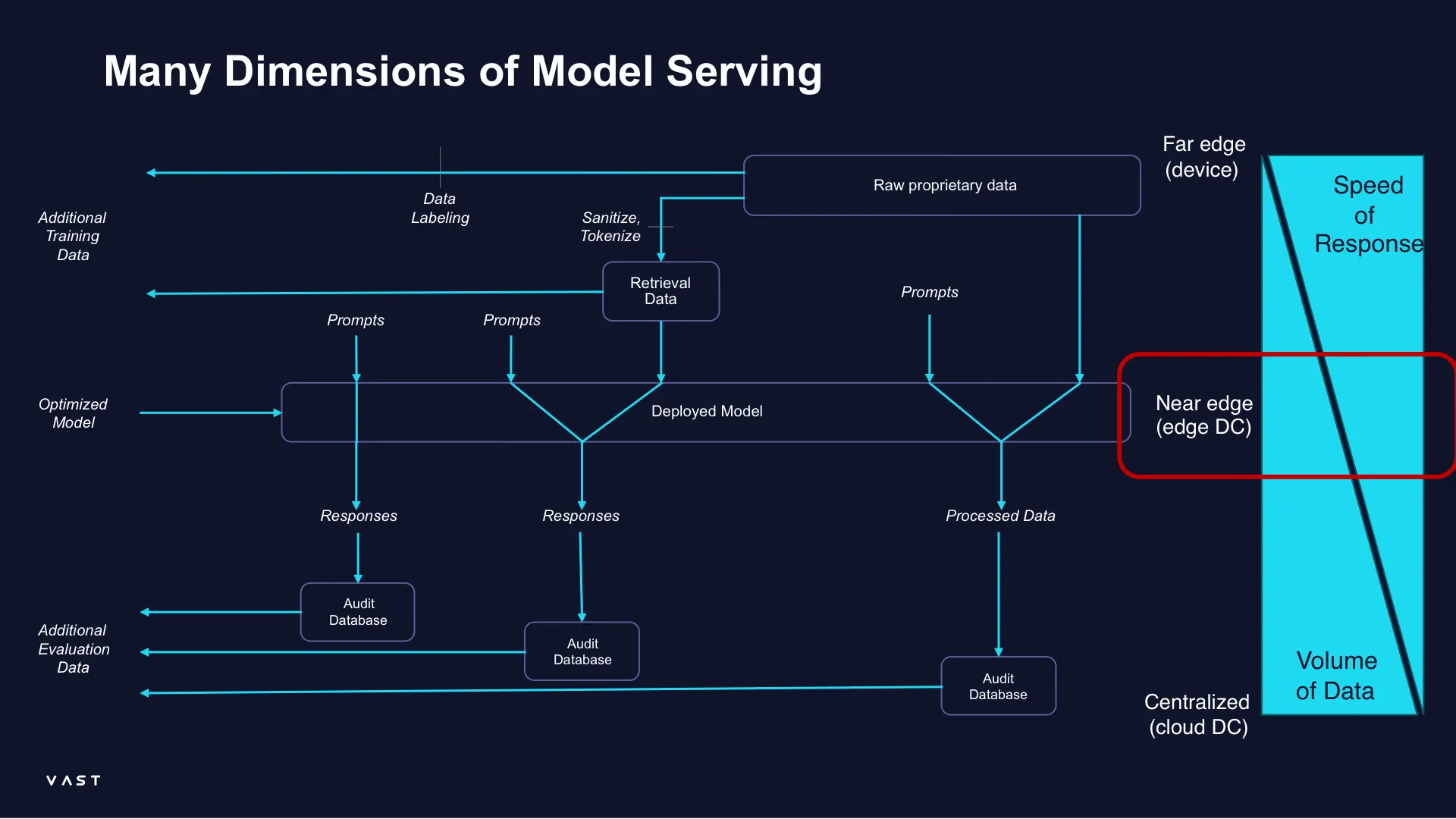

VAST and Vapor IO are working to solve the many challenges involved in deploying AI models across the entire continuum from edge to core to cloud. These challenges, which arise especially in customer-facing scenarios, include:

1. Model serving capacity

Once you have piloted an AI model and are happy with its behavior, you need to deploy it at scale. In doing so, you want to (a) minimize the latency between the user and the model, (b) provide adequate bandwidth, which is especially important for multi-modal models, and (c) ensure that adequate GPU resources will be available.

All three are critical to keeping the deployed model responsive, especially as usage grows. This is where Zero Gap and the partnership between Vapor IO, SuperMicro and NVIDIA shine. Using its Kinetic Grid platform, Vapor IO provides ultra-low-latency access to multiple locations in 36 U.S. cities that host SuperMicro MGX servers with NVIDIA GH200 Superchips.

Whether you are a hospital, a retail store, a factory or warehouse, an entertainment venue or a municipality, you can deliver an experience as if you had GPU-based servers in your facility, without having to deal with the associated headaches. And if your usage surges, Vapor IO's ultra-low-latency network can meet the demand by drawing on GPU resources at nearby locations.
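To make the surge scenario concrete, here is a minimal sketch of latency-aware placement: send each request to the lowest-latency site that still has free GPU capacity, so traffic spills over to the next-nearest site when the closest one is full. The `Site` class, the `pick_site` function, and the site names, latencies, and GPU counts are all hypothetical illustrations, not part of Vapor IO's Kinetic Grid; a real scheduler would also weigh bandwidth, cost, and data locality.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Site:
    """A hypothetical serving location with GPU servers."""
    name: str
    rtt_ms: float   # measured round-trip time from the user to this site
    free_gpus: int  # GPUs currently available for inference


def pick_site(sites: List[Site], gpus_needed: int = 1) -> Optional[Site]:
    """Return the lowest-latency site with enough free GPUs, or None if all are full.

    If the nearest site is saturated, the request spills over to the
    next-nearest site that still has capacity.
    """
    candidates = [s for s in sites if s.free_gpus >= gpus_needed]
    if not candidates:
        return None
    return min(candidates, key=lambda s: s.rtt_ms)


# Hypothetical sites: the nearest one is saturated.
sites = [
    Site("site-a", rtt_ms=2.0, free_gpus=0),
    Site("site-b", rtt_ms=5.0, free_gpus=3),
    Site("site-c", rtt_ms=12.0, free_gpus=8),
]
print(pick_site(sites).name)  # site-b
```

Filtering on capacity first and then minimizing latency keeps the rule simple: users are always served from the closest location that can actually take the work.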

2. Availability of enterprise data for retrieval augmentation

The next big challenge is the availability of real-world data. Generative AI is very convincing... at times, too convincing. For many use cases, AI models are most useful when paired with retrieval-augmented generation (RAG), which lets a model combine external data with the knowledge it gained during training to provide more accurate and reliable responses. The challenge with RAG is that it often requires access to large amounts of data, some of which changes frequently.

The VAST Data Platform helps here in several ways. First, it provides a high-performance repository for customer data in each Zero Gap city, with multiple ways to get data onto the platform, ranging from traditional file and object interfaces to more modern approaches such as streaming data in directly with Kafka. Next, it enables that data to be used quickly to update a vector database that the AI model can, in turn, search very efficiently. Lastly, the VAST DataSpace ensures that all locations have access to the same data without having to constantly replicate it. This means customers who have deployed RAG-enabled AI models on Zero Gap can provide responses based on data gathered across the country without slowing down the model.
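The retrieval step of RAG can be sketched in a few lines: embed documents as they arrive, embed the user's question, rank documents by cosine similarity, and prepend the best matches to the prompt. This is a minimal, self-contained illustration under stated assumptions: the bag-of-words `embed` function is a toy stand-in for a real embedding model, and the in-memory `VectorIndex` class is a hypothetical stand-in for a vector database; neither reflects a VAST API.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

Vector = Dict[str, float]


def embed(text: str) -> Vector:
    """Toy embedding: L2-normalized bag-of-words counts (stand-in for a real model)."""
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {term: c / norm for term, c in counts.items()}


def cosine(a: Vector, b: Vector) -> float:
    # Both vectors are already normalized, so the dot product is the cosine similarity.
    return sum(weight * b.get(term, 0.0) for term, weight in a.items())


class VectorIndex:
    """Hypothetical in-memory index standing in for a vector database."""

    def __init__(self) -> None:
        self.entries: List[Tuple[str, Vector]] = []

    def add(self, doc: str) -> None:
        # Embedding documents as they arrive keeps retrieval current with fresh data.
        self.entries.append((doc, embed(doc)))

    def search(self, query: str, k: int = 2) -> List[str]:
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]


def build_prompt(question: str, index: VectorIndex, k: int = 2) -> str:
    """Augment the user's question with the top-k retrieved passages."""
    context = "\n".join(f"- {doc}" for doc in index.search(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


index = VectorIndex()
for doc in [
    "Store 12 is open until 9 pm on weekdays.",
    "Returns are accepted within 30 days with a receipt.",
    "The loyalty program awards 1 point per dollar spent.",
]:
    index.add(doc)
print(build_prompt("What time does store 12 close?", index, k=1))
```

The generative model then receives the augmented prompt instead of the bare question, which is why keeping the index up to date with frequently changing data matters so much.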

3. Keeping track of prompts and responses

Generative AI is, by its very nature, non-deterministic. Ask a generative AI model the same question 20 times and you will likely get a variety of answers. For this reason, it's critical to track every prompt sent to, and every response returned by, a deployed AI model. This is true regardless of modality: text, speech, images and video all need to be stored.

Further, in most cases, a deployed AI application consists of several models. A generative model crafts the initial response, but it is typically accompanied by more traditional ML models that examine the dialog for things like sentiment (is the customer satisfied or frustrated?) and business risk (is the model exposing confidential data or making promises it shouldn't?). Storing and managing all this data is challenging in one or two locations, never mind dozens or hundreds. This is where the VAST DataBase comes to the rescue, providing the ability to store structured, tabular data. VAST also integrates with tools like Apache Spark, enabling users to perform table scans or generate projections across billions of rows within seconds. By using the VAST DataBase to store prompt, response, user, model, vector DB and many other details, users of the Zero Gap AI platform gain full traceability of all AI operations.
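As a rough illustration of what such a traceability table might hold, the sketch below logs each exchange together with the serving site, model version, vector index, and the outputs of the sentiment and risk models, then summarizes them per model version. It is a minimal sketch that assumes Python's built-in `sqlite3` as a stand-in for the VAST DataBase (it does not use any VAST or Spark API), and the table layout and the `log_exchange` and `risk_summary` helpers are hypothetical.

```python
import sqlite3
from datetime import datetime, timezone

# Hypothetical schema; sqlite3 stands in for the VAST DataBase in this sketch.
SCHEMA = """
CREATE TABLE IF NOT EXISTS prompt_log (
    id            INTEGER PRIMARY KEY AUTOINCREMENT,
    ts            TEXT NOT NULL,    -- UTC timestamp of the exchange
    site          TEXT NOT NULL,    -- serving location
    user_id       TEXT NOT NULL,
    model_version TEXT NOT NULL,    -- generative model that produced the response
    vector_index  TEXT,             -- vector index consulted for RAG, if any
    prompt        TEXT NOT NULL,
    response      TEXT NOT NULL,
    sentiment     REAL,             -- score from a sentiment model
    risk_flag     INTEGER NOT NULL DEFAULT 0  -- 1 if a risk model flagged the response
)
"""


def open_log(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def log_exchange(conn, *, site, user_id, model_version, vector_index,
                 prompt, response, sentiment, risk_flag) -> None:
    """Record one prompt/response pair plus the outputs of the side models."""
    with conn:  # commit the insert as a single transaction
        conn.execute(
            "INSERT INTO prompt_log (ts, site, user_id, model_version, vector_index, "
            "prompt, response, sentiment, risk_flag) "
            "VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?)",
            (datetime.now(timezone.utc).isoformat(), site, user_id, model_version,
             vector_index, prompt, response, sentiment, int(risk_flag)),
        )


def risk_summary(conn):
    """Per model version: number of exchanges, flagged responses, average sentiment."""
    return conn.execute(
        "SELECT model_version, COUNT(*), SUM(risk_flag), AVG(sentiment) "
        "FROM prompt_log GROUP BY model_version ORDER BY model_version"
    ).fetchall()
```

Keeping the side-model outputs in the same row as the prompt and response is what makes questions like "which model version produced the most flagged answers last week?" a single aggregate query.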

4. Model repository and versioning

AI models are no different from the code you deploy in your applications: you are going to have multiple versions in use at the same time.

Take Llama3, for example. If you had deployed a model based on Llama2, you are very likely going to pilot a version of that model based on Llama3. Assuming that pilot goes well, you will want to start rolling it out. More importantly, if you are using RAG, your embeddings have likely changed between the models, so you are going to need two copies of your vector database, one for each model. Vapor IO and VAST make this process easy. With the tooling from Vapor IO together with the VAST DataSpace and VAST Catalog, users can not only maintain many different versions of their models, but also keep a complete audit log of which models are being used at which sites with which data sources.
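The bookkeeping described above can be sketched as a small deployment registry: each model version is paired with the vector index built by its embedding model, a mismatched pairing is rejected, and every deployment is appended to an audit log recording site, model, index, and data source. This is a hypothetical illustration, assuming each model version uses one specific embedding model; the class names and fields are invented for the sketch and say nothing about how Vapor IO's tooling or the VAST Catalog actually work.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class ModelVersion:
    name: str              # hypothetical, e.g. "assistant-llama3"
    embedding_model: str   # embedding model whose vectors this version expects


@dataclass(frozen=True)
class IndexVersion:
    name: str
    embedding_model: str   # embedding model used to build this copy of the vector DB
    source: str            # data source the index was built from


@dataclass
class Registry:
    """Hypothetical registry of which model serves which site with which data."""
    deployments: Dict[str, Tuple[ModelVersion, IndexVersion]] = field(default_factory=dict)
    audit_log: List[Tuple[str, str, str, str, str]] = field(default_factory=list)

    def deploy(self, site: str, model: ModelVersion, index: IndexVersion) -> None:
        # Vectors from different embedding models are not comparable, so refuse a mismatch.
        if model.embedding_model != index.embedding_model:
            raise ValueError(
                f"{model.name} expects {model.embedding_model} embeddings, "
                f"but {index.name} was built with {index.embedding_model}"
            )
        self.deployments[site] = (model, index)
        # Append-only trail: when, where, which model, which index, which data source.
        self.audit_log.append(
            (datetime.now(timezone.utc).isoformat(), site, model.name, index.name, index.source)
        )

    def sites_running(self, model_name: str) -> List[str]:
        return sorted(s for s, (m, _) in self.deployments.items() if m.name == model_name)


# Pilot the new model at one site while the other stays on the old one.
old = ModelVersion("assistant-llama2", embedding_model="embed-a")
new = ModelVersion("assistant-llama3", embedding_model="embed-b")
kb_old = IndexVersion("kb-embed-a", embedding_model="embed-a", source="product-docs")
kb_new = IndexVersion("kb-embed-b", embedding_model="embed-b", source="product-docs")

registry = Registry()
registry.deploy("site-a", old, kb_old)
registry.deploy("site-b", new, kb_new)
print(registry.sites_running("assistant-llama3"))  # ['site-b']
```

Because both copies of the vector database are built from the same source, the audit log can answer which data backed any given answer, regardless of which model version produced it.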

5. Model security

As I mentioned earlier, if customers had to deploy their own dedicated environments for model serving, it would be an extremely slow, painful, and expensive process, and frankly, it would be a significant barrier to the adoption of AI. At the same time, the need to secure these environments should not be underestimated. Not only does all raw data need to be encrypted and access to it controlled, but a bad actor hijacking AI models to masquerade as your company and do damage at a very rapid rate is a very real concern.

Zero Gap AI is a multi-tenant platform that addresses these concerns with a Zero Trust approach to AI model security. It starts at the physical layer, where access to facilities is tightly controlled, and extends to network-level security measures that ensure complete isolation of traffic between tenants. Hardened operating systems, Trusted Platform Modules (TPMs), and secure software bills of materials (SBOMs) further safeguard the operating environment against compromise. Tenant identity systems and external key managers ensure that each tenant maintains full control over its users and data, and immutable snapshots ensure that historical data can meet legal hold standards. All data access operations are fully audited, and active, machine-learning-driven monitoring swiftly identifies and quarantines suspicious behavior. Through the collaborative efforts of Vapor IO, SuperMicro, NVIDIA, and VAST, customers gain access to an enterprise-grade AI model serving platform at a price point that makes adoption easy.

We're excited about this combination of VAST and Vapor IO, which provides an ideal solution for enterprises looking to scale their use of AI rapidly and cost-effectively. Read more about the news in the Vapor IO press release and blog post.