When Douwe Kiela talks about enterprise AI, he doesn’t start with models. He starts with the problem of context.

Kiela, who pioneered Retrieval-Augmented Generation (RAG) at Facebook AI Research and now leads Contextual AI, believes we’ve reached a strange inversion point: our language models can solve Olympiad-level math problems and generate passable code, but they still struggle to understand a company. Not just its facts or documentation, but its structure, its habits, its silences.

This is what he calls the context paradox—the realization that things that seem trivially human, like placing information in its proper setting, are far more elusive to machines than the tasks we long thought hard.

What we’re watching unfold, he argues, isn’t the failure of AI itself. It’s the failure of applying general-purpose intelligence to deeply situated problems.

Enterprise, as it turns out, is nothing if not situated. “Large language models are great at solving math problems,” he says, “but terrible at understanding your company.” And it’s not because they’re flawed—it’s because they’re disconnected. From data. From intent. From domain-specific nuance. From…well, context.

So the problem isn’t the model. It’s everything else. “Models are 20% of the value,” he says. “The other 80% is everything around them.”

And it’s that 80% (retrieval infrastructure, ranking, chunking logic, observability, eval tooling, access control, compliance) that turns capability into utility. It’s also where most companies stumble, because too many teams treat the model as the product.

But a great model inside a bad system is still a bad product. You can duct-tape GPT-4 to a knowledge base and get something that looks like an assistant. But what you won’t get is trust.

The mistake, then, is thinking too generally. Chasing general-purpose systems when the real value lives in deep specialization.

“AGI is exciting,” he concedes, “but solving real problems in the enterprise requires specialization.” Systems must reflect the structure and knowledge of the domain they serve. Anything less is just another surface-level UX experiment.

The raw material for that specialization–the source of differentiation–isn’t found in benchmark datasets or model weights; it’s in the company’s own chaos. The archived PDFs. The support transcripts. The outdated guides. The institutional memory that doesn’t live in any one place but somehow gets used every day. That’s the moat. “Don’t wait to clean your data,” Kiela says. “Build AI that thrives in the mess.”

He argues the mess isn’t a problem; it’s an advantage, provided your system can metabolize it. And once it can, you’ve built something defensible.

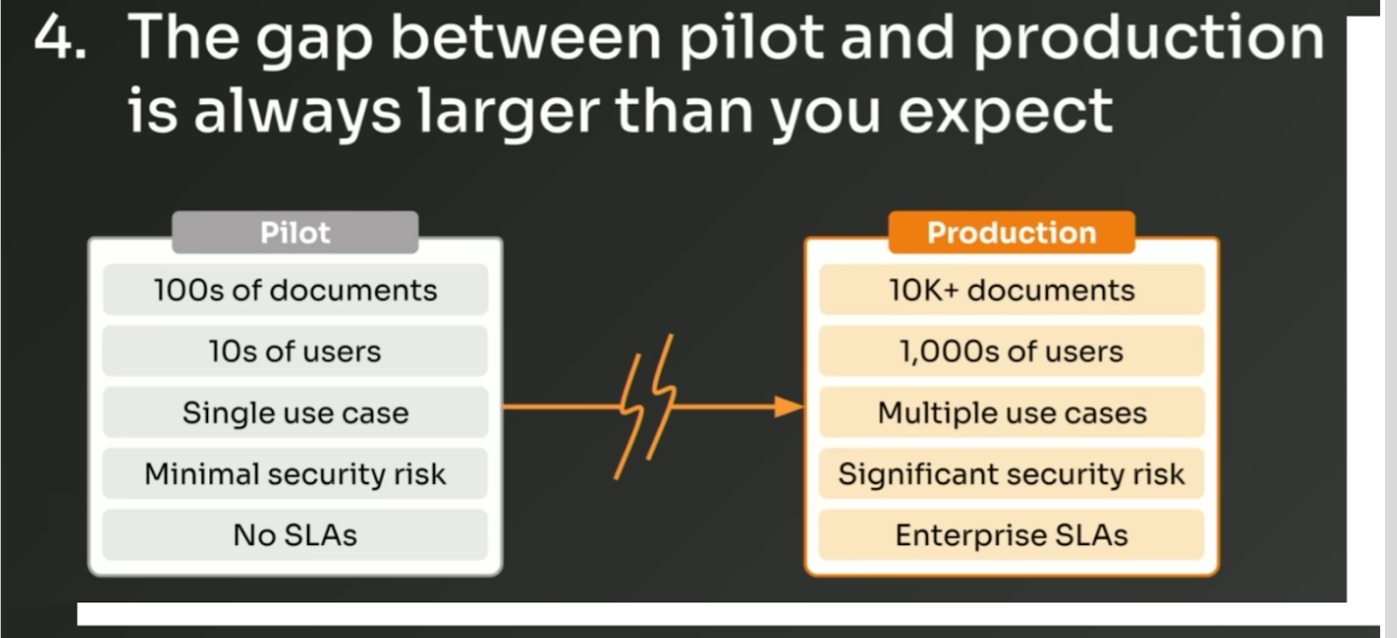

Of course, getting there means breaking the shallow-product cycle. Kiela is blunt on this point: pilots are easy. Polished demos running on a hundred documents with a handful of testers? Everyone can build that now. But real production–systems running across millions of documents, at latency, behind firewalls, under compliance oversight–that’s where the gap widens. And it widens fast.

You can’t solve for it by perfecting a pilot. You solve for it by designing for production from the beginning. That means ruthless prioritization. Architecting for observability and auditability from day one. And above all, it means shipping early. Not to internal champions, but to the real users—the ones who have no patience for cleverness.

“You don’t need to be perfect,” Kiela says. “You need to be useful.”

In enterprise AI, usefulness is what creates belief. When a user unearths a seven-year-old document that suddenly answers the question that’s blocked them for weeks, that’s the spark. “There’s this moment,” he says, “when someone finds the answer they didn’t even know existed–and they’re never the same again.”

That moment matters more than accuracy metrics. Accuracy, after all, is now table stakes. What matters is knowing when the system is wrong—and why. Traceability. Attribution. An audit trail that justifies the answer. In enterprise, trust isn’t earned by being right. It’s earned by being accountable.

“We live in a special time,” he says. “AI is going to change our entire society. You have a chance to shape that—so aim for the sky.”

And if that sounds grandiose, it’s only because most of the industry has been playing small. The ambition isn’t the risk. The risk is building tools no one needs.