Few people outside the datacenter appreciate how fundamentally storage performance dictates the economics of large-scale AI. It’s traditionally been easy to overlook, especially amidst the glitter of GPUs and trillion-parameter models.

But behind the curtain, storage is the unsung hero or, depending on how you look at it, the villain of AI performance. Without careful architecting, GPUs can starve, burning budgets and leaving data scientists frustrated.

It’s exactly this scenario that CoreWeave aimed to avoid when Jeff Braunstein, CoreWeave’s Product Manager for Storage, conducted rigorous performance tests, which he detailed at Nvidia’s GTC and in an extensive written post.

“High performance storage is critical to the success of AI model training workloads,” he said during his GTC breakdown of the results. “As the complexity and size of these models continues to grow, the demand for storage systems has intensified.”

As Braunstein explained, “We're seeing increased data volumes for AI models, they’re continuing to grow in size. Customers are also using more and larger types of data. They're using multimodal training data, and there's a need for fast data access to GPUs.”

He added that “traditional cloud storage services really just aren't fast enough, they aren't really built for these AI workflows. So what's really needed are storage solutions that are optimized for high IO and large datasets across large numbers of GPUs.”

The problem comes down to cost. “As we get to these larger and larger training models, or models with trillions of parameters, the cost to train these models is skyrocketing.” He explained that over the past year, they’ve seen model sizes explode on the road to billion-dollar training runs. “Anything that can be done to decrease the training times, increase the performance, and as far as data and storage goes, is going to really help keep these costs as low as possible.”

At this scale, even modest improvements in storage throughput can shift the economics dramatically. Conversely, every moment a GPU sits idle waiting for data is a direct, measurable financial loss. Storage performance is no longer merely a technical concern; it's a strategic one.
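To make that loss concrete, here is a back-of-the-envelope sketch. It multiplies GPU count, run length, the fraction of time GPUs stall on I/O, and an hourly GPU price. Every input in the example (the 30-day run, the 5% stall fraction, the $2.00 per GPU-hour price) is a hypothetical value chosen for illustration, not a CoreWeave figure.

```python
def idle_cost_usd(num_gpus: int, run_hours: float, stall_fraction: float,
                  usd_per_gpu_hour: float) -> float:
    """Dollar cost of GPU time spent idle while waiting on storage.

    stall_fraction is the share of wall-clock time (0.0 to 1.0) that the GPUs
    sit idle waiting for data reads or checkpoint writes.
    """
    if not 0.0 <= stall_fraction <= 1.0:
        raise ValueError("stall_fraction must be between 0 and 1")
    return num_gpus * run_hours * stall_fraction * usd_per_gpu_hour


# Hypothetical inputs: 4,096 GPUs, a 30-day run, 5% of time stalled on I/O,
# and an illustrative price of $2.00 per GPU-hour (not a quoted rate).
cost = idle_cost_usd(4096, 30 * 24, 0.05, 2.00)
print(f"${cost:,.0f}")  # $294,912
```

Because the cost scales linearly with the stall fraction, halving I/O stalls halves this figure, which is why storage throughput shows up directly on the bill.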

To illustrate this, he shared data from CoreWeave’s own infrastructure. A representative example involved a training run across 4,096 Nvidia Hopper GPUs that generated massive throughput demands. He said they see peak read rates of 70 gigabytes per second and checkpoint write rates of 50 gigabytes per second.

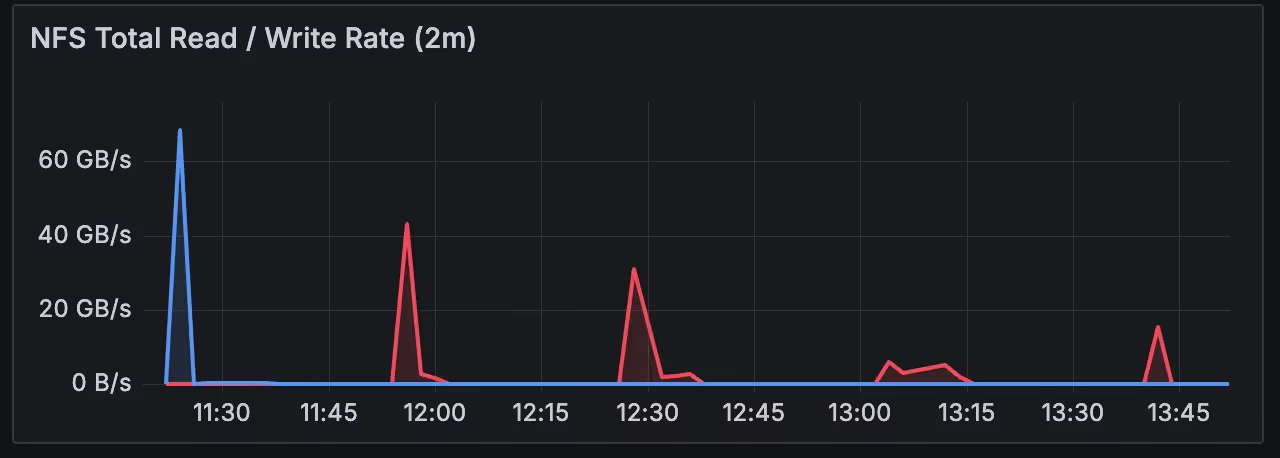

Braunstein also highlighted typical observability metrics CoreWeave shares with customers, showing periods of lowered GPU utilization that corresponded directly with data reads and checkpoint operations. In these charts, where red indicates high utilization, GPU utilization dropped precisely when the GPUs were waiting for data. In short, efficient storage isn’t merely beneficial; it’s mandatory.
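The same effect can be roughly approximated from inside a training loop by timing how long each step blocks on the data iterator versus how long it spends computing. The sketch below is a generic, framework-agnostic illustration, not CoreWeave's observability tooling; `measure_data_wait` and `data_wait_fraction` are hypothetical helper names.

```python
import time
from typing import Callable, Iterable, Tuple, TypeVar

T = TypeVar("T")


def measure_data_wait(batches: Iterable[T],
                      step: Callable[[T], None]) -> Tuple[float, float]:
    """Run `step` on every batch; return (seconds waiting for data, seconds computing)."""
    wait_s = compute_s = 0.0
    it = iter(batches)
    while True:
        t0 = time.perf_counter()
        try:
            batch = next(it)  # time blocked here is time the GPU would sit idle
        except StopIteration:
            break
        t1 = time.perf_counter()
        step(batch)           # stand-in for the forward/backward pass
        t2 = time.perf_counter()
        wait_s += t1 - t0
        compute_s += t2 - t1
    return wait_s, compute_s


def data_wait_fraction(wait_s: float, compute_s: float) -> float:
    """Share of step time spent waiting on input; near 0 means the loader keeps up."""
    total = wait_s + compute_s
    return wait_s / total if total > 0 else 0.0
```

A fraction that climbs during data-heavy phases mirrors the utilization dips in the dashboards described above.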

To quantify their storage capabilities precisely, CoreWeave ran a series of benchmark tests designed to simulate realistic AI training scenarios. The fundamental goal was to confirm whether CoreWeave’s distributed file storage could sustain one gigabyte per second per GPU while scaling up to hundreds of Nvidia GPUs. This threshold, Braunstein said, represents the critical baseline for modern AI workloads.

The tests used the FIO (Flexible I/O Tester) benchmark tool on CoreWeave’s bare-metal Kubernetes architecture, which incorporates H100 GPUs and BlueField DPUs to optimize data handling efficiency.
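The article doesn’t publish CoreWeave’s exact FIO job definitions, so the following is only a sketch of how such a sweep might be scripted. It builds `fio` command lines for sequential and random reads and writes across several block sizes. The option names are standard fio flags, but the mount path `/mnt/vast` and the values for `numjobs`, `iodepth`, `size`, and `runtime` are assumptions chosen for illustration.

```python
from typing import List, Sequence

MODES = ("read", "write", "randread", "randwrite")


def fio_command(rw: str, block_size: str, directory: str,
                numjobs: int = 8, iodepth: int = 16,
                size: str = "10G", runtime_s: int = 60) -> List[str]:
    """Build an fio argv for one test point. Defaults are illustrative guesses."""
    if rw not in MODES:
        raise ValueError(f"unsupported rw mode: {rw}")
    return [
        "fio",
        f"--name={rw}-{block_size}",
        f"--directory={directory}",  # e.g. the NFS mount inside the pod (hypothetical path)
        f"--rw={rw}",
        f"--bs={block_size}",
        f"--size={size}",            # file size per job
        f"--numjobs={numjobs}",
        f"--iodepth={iodepth}",
        "--ioengine=libaio",
        "--direct=1",                # bypass the client page cache
        f"--runtime={runtime_s}",
        "--time_based",
        "--group_reporting",         # one summary across all jobs
        "--output-format=json",
    ]


def sweep(directory: str,
          block_sizes: Sequence[str] = ("1M", "4M", "16M", "32M")) -> List[List[str]]:
    """Every combination of access pattern and block size."""
    return [fio_command(rw, bs, directory) for rw in MODES for bs in block_sizes]
```

Each command could be launched on every node with `subprocess.run(cmd, capture_output=True, check=True)` and its JSON output collected for analysis.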

The storage backend for these tests was VAST, accessed over standard NFS through Kubernetes.

The benchmarks scaled systematically from a single 8-GPU node up to 64 nodes (512 GPUs total), covering sequential and random read and write scenarios. Braunstein highlighted the rigor of the approach: testing multiple block sizes, averaging throughput measurements, and carefully analyzing network utilization to isolate performance constraints.
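Averaging and aggregating those measurements is simple bookkeeping, sketched below under a few assumptions. The sketch assumes fio 3.x JSON output, where each job’s `read` and `write` sections include a `bw_bytes` field in bytes per second. It also assumes all nodes run concurrently, so summing per-node averages yields cluster-wide throughput. The helper names are hypothetical.

```python
import json
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def throughput_gbps(fio_json: str, direction: str = "read") -> float:
    """Throughput in GB/s (10**9 bytes/s) from one fio JSON report.

    Sums `bw_bytes` over the jobs listed, which works whether or not
    --group_reporting collapsed them into a single entry.
    """
    report = json.loads(fio_json)
    return sum(job[direction]["bw_bytes"] for job in report["jobs"]) / 1e9


def aggregate(samples: Iterable[Tuple[str, str, float]]) -> Dict[str, float]:
    """Cluster throughput per block size from (node, block_size, GB/s) samples.

    Repeated runs on the same node are averaged first; the per-node averages
    are then summed, assuming every node ran its test at the same time.
    """
    runs: Dict[Tuple[str, str], List[float]] = defaultdict(list)
    for node, block_size, gbps in samples:
        runs[(node, block_size)].append(gbps)
    totals: Dict[str, float] = defaultdict(float)
    for (_node, block_size), values in runs.items():
        totals[block_size] += mean(values)
    return dict(totals)
```

The best block size is then simply `max(totals, key=totals.get)`, and dividing by the GPU count gives the per-GPU figure compared against the one-gigabyte-per-second target.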

Early results at smaller scales were promising. On a single server node with 8 GPUs, CoreWeave’s storage solution delivered impressive throughput. “We had 88% of the networking throughput maximum of 12.5 gigabytes per second,” Braunstein said. That comfortably surpassed the one-gigabyte-per-second-per-GPU target.
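The arithmetic behind “comfortably surpassed” is worth spelling out. The sketch uses only the figures quoted above: 88% of a 12.5 GB/s network ceiling (equivalent to 100 Gb/s) shared across 8 GPUs.

```python
LINK_MAX_GBPS = 12.5   # per-node network ceiling quoted above, in GB/s (100 Gb/s)
UTILIZATION = 0.88     # share of that ceiling achieved in the single-node test
GPUS_PER_NODE = 8
TARGET_PER_GPU = 1.0   # GB/s per GPU goal

node_gbps = LINK_MAX_GBPS * UTILIZATION   # about 11 GB/s for the node
per_gpu = node_gbps / GPUS_PER_NODE       # about 1.375 GB/s per GPU
print(f"node: {node_gbps:.2f} GB/s, per GPU: {per_gpu:.3f} GB/s, "
      f"meets target: {per_gpu >= TARGET_PER_GPU}")
# node: 11.00 GB/s, per GPU: 1.375 GB/s, meets target: True
```

That works out to roughly 37% headroom above the per-GPU goal on a single node.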

But could performance hold steady at large scale? To find out, CoreWeave ramped up to its largest tested configuration: 64 GPU nodes, each equipped with 8 H100 GPUs, for a total of 512 GPUs. Braunstein shared the outcome: “We saw a total aggregate throughput of 508 gigabytes a second across those 64 servers, which results in 7.94 gigabytes a second per node,” essentially meeting the critical one-gigabyte-per-second-per-GPU benchmark at scale.
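“Essentially meeting” can be quantified from the quoted aggregate. Dividing 508 GB/s across 64 nodes and then across 8 GPUs per node gives the per-GPU rate and shows how close it lands to the 1 GB/s target.

```python
AGGREGATE_GBPS = 508.0   # total read throughput across the 64-node test
NODES = 64
GPUS_PER_NODE = 8

per_node = AGGREGATE_GBPS / NODES        # 7.9375 GB/s
per_gpu = per_node / GPUS_PER_NODE       # 0.9921875 GB/s
shortfall_pct = (1.0 - per_gpu) * 100    # distance below the 1 GB/s target
print(f"per node: {per_node:.2f} GB/s, per GPU: {per_gpu:.3f} GB/s, "
      f"{shortfall_pct:.1f}% below the 1 GB/s target")
# per node: 7.94 GB/s, per GPU: 0.992 GB/s, 0.8% below the 1 GB/s target
```

At 512 GPUs, the result sits within 1% of the goal.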

Checkpoint writes, an essential operation in AI training, also excelled. With 64 GPUs, sequential writes reached robust throughput. “We saw a maximum throughput of 78 gigabytes per second at a 32-megabyte block size,” Braunstein explained, noting that this rate was “in excess of one gigabyte a second” per GPU.
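Write throughput matters because training often pauses while a checkpoint is saved. The sketch below computes the per-GPU write rate from the quoted figures. It then estimates a lower bound on save time for a checkpoint of a given size. The 2 TB checkpoint is a hypothetical value; real sizes depend on the model and its optimizer state, and the estimate ignores serialization overhead.

```python
def checkpoint_write_seconds(checkpoint_gb: float, write_gbps: float) -> float:
    """Lower-bound time to write a checkpoint at a sustained write rate."""
    if write_gbps <= 0:
        raise ValueError("write_gbps must be positive")
    return checkpoint_gb / write_gbps


WRITE_GBPS = 78.0   # peak sequential write at a 32 MB block size, quoted above
GPUS = 64

print(f"per GPU: {WRITE_GBPS / GPUS:.2f} GB/s")  # per GPU: 1.22 GB/s
# Hypothetical 2 TB (2,000 GB) checkpoint, assuming the peak rate is sustained.
print(f"2 TB checkpoint: ~{checkpoint_write_seconds(2000, WRITE_GBPS):.0f} s")
# 2 TB checkpoint: ~26 s
```

Multiply that pause by the number of checkpoints in a run and by the GPU count, and the value of faster writes becomes clear.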

While these infrastructure benchmarks are critical, Braunstein emphasized that storage efficiency doesn’t end with hardware. Intelligent data management strategies are equally vital.

For instance, CoreWeave integrates high-speed node-local NVMe storage directly into its infrastructure at no extra cost, so customers can use caching and buffering strategies seamlessly. Braunstein noted that these temporary stores dramatically reduce data-transfer bottlenecks.
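One simple caching pattern is copy-on-first-read. The first time a file is needed, it is copied from shared storage to local NVMe, and later reads (for example, in subsequent epochs) hit the local copy. The sketch below is a generic illustration, not a CoreWeave API. `NodeLocalCache` is a hypothetical class, and the shared and local roots stand in for an NFS mount and an NVMe directory.

```python
import os
import shutil
import tempfile
from pathlib import Path


class NodeLocalCache:
    """Copy-on-first-read cache from shared storage to node-local disk.

    `shared_root` stands for the network file system mount and `cache_root`
    for a directory on local NVMe; both are placeholders.
    """

    def __init__(self, shared_root: str, cache_root: str) -> None:
        self.shared_root = Path(shared_root)
        self.cache_root = Path(cache_root)
        self.hits = 0
        self.misses = 0

    def get(self, relative_path: str) -> Path:
        """Return a local path for `relative_path`, copying it in on a miss."""
        local = self.cache_root / relative_path
        if local.exists():
            self.hits += 1
            return local
        self.misses += 1
        local.parent.mkdir(parents=True, exist_ok=True)
        # Copy to a per-process temporary name, then rename atomically, so a
        # crash mid-copy never leaves a partial file that looks like a hit.
        tmp = local.with_name(f"{local.name}.{os.getpid()}.partial")
        shutil.copyfile(self.shared_root / relative_path, tmp)
        os.replace(tmp, local)
        return local


def demo() -> tuple:
    """Tiny self-check using temporary directories in place of real mounts."""
    with tempfile.TemporaryDirectory() as shared, tempfile.TemporaryDirectory() as nvme:
        Path(shared, "shard-00000.bin").write_bytes(b"example bytes")
        cache = NodeLocalCache(shared, nvme)
        first = cache.get("shard-00000.bin")   # miss: copied from shared storage
        second = cache.get("shard-00000.bin")  # hit: served from the local copy
        return cache.hits, cache.misses, first == second, second.read_bytes()
```

The atomic rename keeps the cache safe to reuse across restarts. Eviction is left out, on the assumption that the working set fits on local NVMe.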

He also detailed prefetching, explaining the significant performance benefit of staging data locally ahead of GPU demand. He also pointed to subtle but critical details of data organization, in this case careful directory structuring and data sharding. These seemingly minor decisions, Braunstein insisted, substantially affect throughput as workloads scale. Well-designed directory structures, he noted, are especially important for reducing “hot spotting” and related storage inefficiencies.
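One common way to keep directory layouts from developing hot spots is hash-based fan-out. Each file lands in a subdirectory derived from a hash of its name, so files spread evenly rather than piling into one huge directory that absorbs most of the metadata traffic. This is a widely used technique offered as an illustration, not necessarily the layout Braunstein recommended. `sharded_path` is a hypothetical helper, and SHA-1 is used here for even distribution, not security.

```python
import hashlib
from pathlib import PurePosixPath


def sharded_path(root: str, filename: str, levels: int = 2,
                 width: int = 2) -> PurePosixPath:
    """Place `filename` under hash-derived subdirectories, e.g. root/3f/a2/filename.

    Each level uses `width` hex characters, i.e. 16**width directories per
    level (256 for width=2), so entries spread evenly across the tree.
    """
    digest = hashlib.sha1(filename.encode("utf-8")).hexdigest()
    parts = [digest[i * width:(i + 1) * width] for i in range(levels)]
    return PurePosixPath(root, *parts, filename)
```

The mapping is deterministic, so readers can locate any file from its name alone, without a directory listing or a separate index.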

Pipeline optimization (preprocessing and data transformation) was another crucial dimension. Braunstein noted such techniques reduce latency significantly by offloading data preparation tasks from the storage system itself.
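Prefetching and pipelining share one idea: read and preprocess the next batches while the GPU works on the current one, so I/O overlaps compute instead of blocking it. Below is a minimal sketch of that producer-consumer pattern using a background thread and a bounded queue. `prefetch` is a hypothetical helper, and production frameworks offer their own equivalents, such as the `num_workers` and `prefetch_factor` options of PyTorch’s `DataLoader`.

```python
import queue
import threading
from typing import Callable, Iterable, Iterator, TypeVar

T = TypeVar("T")
U = TypeVar("U")

_DONE = object()  # sentinel marking the end of the stream


class _Failure:
    """Wraps an exception raised in the worker so the consumer can re-raise it."""

    def __init__(self, exc: BaseException) -> None:
        self.exc = exc


def prefetch(items: Iterable[T], load: Callable[[T], U],
             depth: int = 4) -> Iterator[U]:
    """Yield load(item) for each item in order, with up to `depth` results staged ahead.

    A background thread reads and preprocesses upcoming items while the caller
    works on the current one.
    """
    if depth < 1:
        raise ValueError("depth must be at least 1")
    buf: "queue.Queue[object]" = queue.Queue(maxsize=depth)

    def worker() -> None:
        try:
            for item in items:
                buf.put(load(item))  # blocks once `depth` results are waiting
        except BaseException as exc:
            buf.put(_Failure(exc))
        finally:
            buf.put(_DONE)

    # Daemon thread so an abandoned iterator does not keep the process alive.
    threading.Thread(target=worker, daemon=True).start()
    while True:
        value = buf.get()
        if value is _DONE:
            return
        if isinstance(value, _Failure):
            raise value.exc
        yield value  # type: ignore[misc]
```

The bounded queue caps memory use at `depth` staged batches. Threads suit I/O-bound loading; CPU-heavy preprocessing in Python would usually move to worker processes instead.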

The final critical strategy Braunstein shared was proactive monitoring and error handling, which is essential in Kubernetes environments running sensitive AI workloads. He noted specifically that CoreWeave’s Kubernetes architecture gives customers deep insight into what's happening during their workflows, allowing them to take immediate corrective action to preserve reliability and performance.
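On the error-handling side, a common client-side pattern is to retry transient I/O failures with capped exponential backoff and jitter. Each failure is logged so it surfaces in monitoring rather than silently stalling a job. The sketch below is a generic illustration, not CoreWeave’s tooling. `with_retries` is a hypothetical helper, and treating every `OSError` as transient is an assumption that real code would narrow.

```python
import logging
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")
log = logging.getLogger("io-retry")


def with_retries(op: Callable[[], T], attempts: int = 5,
                 base_delay_s: float = 0.5, max_delay_s: float = 30.0,
                 retry_on: Tuple[Type[BaseException], ...] = (OSError,)) -> T:
    """Run `op`, retrying failures in `retry_on` with capped exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return op()
        except retry_on as exc:
            if attempt == attempts:
                log.error("giving up after %d attempts: %s", attempts, exc)
                raise
            delay = min(max_delay_s, base_delay_s * 2 ** (attempt - 1))
            # Jitter keeps many workers from retrying against storage in lockstep.
            delay *= random.uniform(0.5, 1.0)
            log.warning("attempt %d failed (%s); retrying in %.2fs",
                        attempt, exc, delay)
            time.sleep(delay)
    raise RuntimeError("unreachable")
```

A read would be wrapped as `with_retries(lambda: path.read_bytes())`. The warning logs provide a signal that dashboards or alerts can count.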

These benchmarks tell an important story: large-scale AI training demands have outstripped traditional storage architectures. With AI models pushing trillion-parameter boundaries, infrastructure must evolve or face obsolescence.

The takeaway is that in the new era of large-scale AI, effective storage isn’t merely technical plumbing; it’s strategic and economic. By ensuring storage reliably sustains aggressive throughput demands, AI teams can fully exploit GPU investments, reduce costs, and accelerate innovation.

As Braunstein’s benchmarks show, storage efficiency isn’t about minor optimizations; it’s about rethinking how infrastructure fundamentally operates.